Improving Depth Perception in RGB-D SLAM by Data Fusion from Sensors with Different Measurement Principles

This subpage presents the datasets gathered with the Asus Xtion and MESA SwissRanger SR-4000 sensors, which were used to demonstrate the gains of depth fusion in a SLAM system. The article titled "Improving Depth Perception in RGB-D SLAM by Data Fusion from Sensors with Different Measurement Principles" was submitted to Transactions on Industrial Informatics, to the special session Multisensor Fusion and Integration for Intelligent Systems.

Abstract

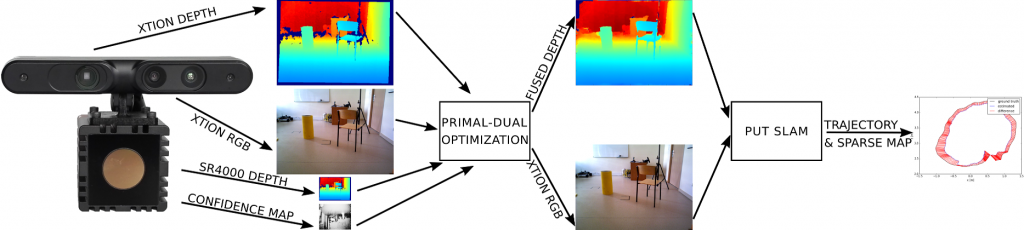

In the last decade, Simultaneous Localization and Mapping (SLAM) methods based on RGB and depth data have become popular. They mainly use structured-light sensors, while time-of-flight cameras are rarely used in SLAM. The range measurement methods used in these sensors have different characteristics, and hence distinct uncertainties and artefacts. Therefore, we develop an algorithm for depth data fusion from two different sensors and test the fusion results in SLAM.

We use convex optimization for data fusion and demonstrate that it yields better results than using the individual depth channels in RGB-D SLAM. The experimental evaluation is based on data from a calibrated rig (Asus Xtion, MESA SwissRanger). The accuracy of the estimated trajectories is evaluated with the ATE and RPE metrics. The data sets are publicly available.

The new solution, called RGB-DD, provides SLAM with fewer errors caused by inaccuracies in the depth images.
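For readers unfamiliar with the ATE metric mentioned in the abstract, the following is a minimal sketch of its usual RMSE form. It assumes the estimated and ground truth positions are already matched by timestamp and expressed in the same coordinate frame; the function name `ate_rmse` and the tuple-based trajectory representation are illustrative assumptions, not part of the dataset or of the authors' evaluation tools.

```python
import math


def ate_rmse(estimated, ground_truth):
    """RMSE of the position differences between two trajectories.

    Assumption: both inputs are equally long sequences of (x, y, z)
    positions that are already time-matched and aligned to one frame.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    # Squared Euclidean distance between each pair of matched positions.
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est, gt))
        for est, gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

In practice the estimated trajectory is typically aligned to the ground truth with a rigid-body transformation before this error is computed; that step is omitted here for brevity.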

Data gathered with the handheld sensor rig, with ground truth files:

The 7zip file contains the following directories:

- camera_calibration_data

  Information about intrinsic and extrinsic calibrations.

- exp1

- exp2

- exp3

Each experiment (exp1/exp2/exp3) consists of the following directories:

- Asus

  Containing RGB and depth images recorded with Asus Xtion.

- Mesa

  Containing depth and uncertainty images recorded with MESA SwissRanger SR-4000.

- AsusMesaFused

  Containing depth images created with the proposed fusion of Asus and MESA measurements.

- MesaRegistered

  Containing depth information from MESA registered to the Asus coordinate system and rescaled to 640×480.

Data gathered with a real robot, with ground truth files:

- PUTAM Robot Experiment 1 (392 MB)

- PUTAM Robot Experiment 2 (349 MB)

- PUTAM Robot Experiment 3 (820 MB)

Each 7zip file contains a groundtruth.txt file and the following directories:

- Asus

  Containing RGB and depth images recorded with Asus Xtion.

- Mesa

  Containing depth, intensity and uncertainty images recorded with MESA SwissRanger SR-4000.

- AsusMesaFused

  Containing depth images created with the proposed fusion of Asus and MESA measurements.

- MesaRegistered

  Containing depth information from MESA registered to the Asus coordinate system and rescaled to 640×480.
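After extracting one of the robot experiment archives, the layout described above can be checked with a short script. This is only a sketch: the helper name `missing_entries` is hypothetical, and the extraction path passed to it is up to the user. Only the directory and file names come from the description above.

```python
from pathlib import Path

# Directory names as listed for each robot experiment archive.
EXPECTED_DIRS = ("Asus", "Mesa", "AsusMesaFused", "MesaRegistered")


def missing_entries(root):
    """Return the expected entries that are absent under `root`.

    `root` is the folder into which one archive was extracted
    (a user-chosen path, not defined by the dataset).
    """
    root = Path(root)
    missing = [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
    if not (root / "groundtruth.txt").is_file():
        missing.append("groundtruth.txt")
    return missing
```

An empty list returned by `missing_entries` means that all four directories and the ground truth file were found.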