Products of experts for robotic manipulation

POLONEZ BIS-1 project financed by the National Science Centre (NCN)

Autonomous robotic manipulation in novel situations is widely considered an unsolved problem. Solving it would have an enormous impact on society and the economy. It would enable robots to help humans in factories and warehouses, but also with everyday tasks at home.

This project will advance the state of the art in learning algorithms that will enable a robot to autonomously perform complex, dexterous manipulation in unfamiliar real-world situations, with novel objects and significant occlusions. In particular, the robot should be able to grasp, push or pull rigid objects and, to some extent, non-rigid and articulated objects found in homes or warehouses. Importantly, like humans, the robot will also be able to envision the results of its actions without actually performing them. This would let it plan actions such as tilting a low object so that it can be grasped, bending a cloth before gripping and pulling it, sliding a sheet of paper to the edge of a table, or opening a door.

End-to-end (black-box) approaches have made it possible to learn some of these complex tasks with almost no prior knowledge, for example by directly learning a mapping from RGB images to motor commands. While impressive, end-to-end manipulation learning typically requires prohibitive amounts of training data, scales poorly to different tasks, does not involve prediction, and struggles to explain its behaviour, for example why a learned skill fails. On the other hand, exploiting the structure of the problem has been shown to help learning. For example, in bin-picking scenarios, many successful approaches assume at least depth images or trajectory planners for robot control. In autonomous driving, adding intermediate representations such as depth estimation or semantic scene segmentation has made it possible to achieve the highest task performance.

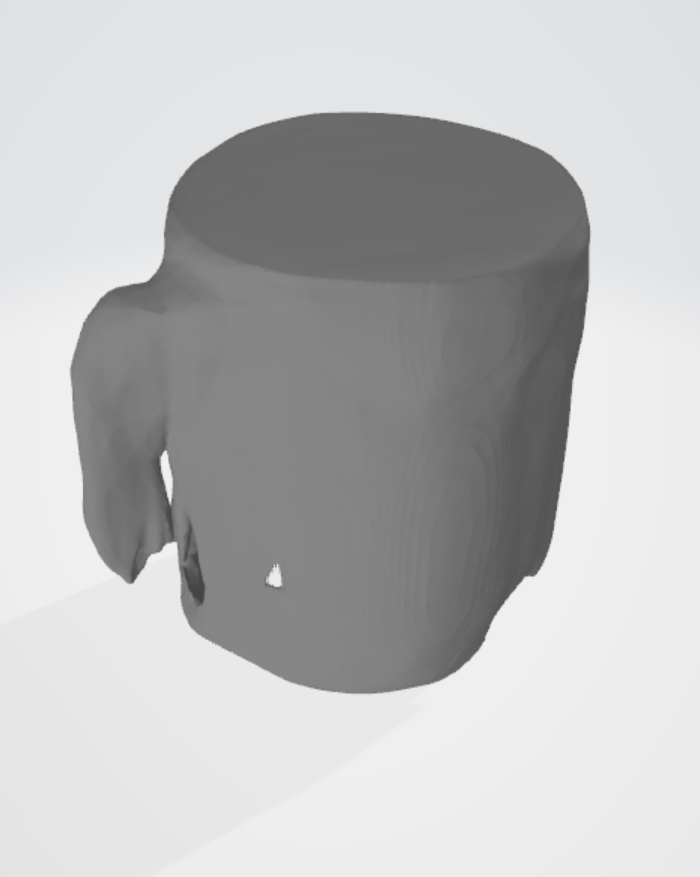

We have previously proposed a modularisation of the learned task, which opens up black boxes and makes them explainable. We introduced the first algorithm capable of learning dexterous grasps of novel objects from just one demonstration. It models a grasp as a product of independent experts: generative models of the contacts between the object and individual hand links. This factorisation accelerates learning and provides a flexible problem structure. Each model is a density over the possible SE(3) poses of a particular robot link, trained from one or more demonstrations. Grasps are selected by maximising the likelihood of the product of the involved contact models, while the relative poses of the links are constrained by manifolds in the hand posture space. Furthermore, we introduced an algorithm that learns accurate kinematic predictions for pushing and grasping solely from experience, without any knowledge of physics. However, our current algorithms and models either do not involve prediction (in grasping), are insensitive to task context and sensitive to occlusions, or assume object exemplars (in prediction).

In this project, we will overcome all these limitations by introducing hierarchical models that rely on CNN features and can represent both visible and occluded parts of objects, for both hand link-object and object-object contacts, as well as the entire manipulation context. The CNN can be trained offline, while the hierarchical models can be trained efficiently during demonstration. Furthermore, we will enable learning quantitative generative models of the SE(3) motion of objects for grasping, pushing and pulling actions. These models can be trained from both demonstration and experience, using depth and RGB images, all within a single algorithm. Finally, we will attempt to model the dynamic properties of contacts and to learn from experience the maximum resistible wrench, i.e. the maximum wrench that does not break a hand-object contact. This would enable predicting slippage of a given hand-object contact during pushing, pulling and grasping.
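To make the product-of-experts grasp selection described above more concrete, here is a minimal Python sketch. It is not the project's implementation. It assumes that each contact expert is a kernel density estimate over SE(3) link poses, with an isotropic Gaussian kernel on position and a kernel exp(κ(|⟨q, qᵢ⟩| − 1)) on unit quaternions (so q and −q count as the same rotation), and it leaves out the hand-posture manifold entirely. The names `ContactExpert`, `product_log_likelihood` and `select_grasp`, and the bandwidths `sigma` and `kappa`, are hypothetical. Because a product of experts becomes a sum in log space, the score of a candidate grasp is the sum of the per-link log-densities, and the selected grasp is the candidate with the highest sum.

```python
import numpy as np


def quat_normalize(q):
    """Scale quaternion(s) to unit length along the last axis."""
    q = np.asarray(q, dtype=float)
    return q / np.linalg.norm(q, axis=-1, keepdims=True)


class ContactExpert:
    """Hypothetical kernel density over SE(3) poses of one robot link.

    Each kernel centre is a (position, unit quaternion) pair, e.g. a link pose
    observed in a demonstration. Positions use an isotropic Gaussian kernel;
    orientations use exp(kappa * (|<q, q_i>| - 1)), which treats q and -q as
    the same rotation. Normalising constants are omitted: they are identical
    for every candidate grasp, so they do not change which one scores highest.
    """

    def __init__(self, positions, quats, sigma=0.01, kappa=50.0):
        self.p = np.asarray(positions, dtype=float)  # (K, 3) kernel positions
        self.q = quat_normalize(quats)                # (K, 4) kernel orientations
        self.sigma = sigma                            # position bandwidth (metres)
        self.kappa = kappa                            # orientation concentration

    def log_density(self, p, q):
        """Unnormalised log-density of a single link pose (p, q)."""
        q = quat_normalize(q)
        d2 = np.sum((self.p - np.asarray(p, dtype=float)) ** 2, axis=1)
        dot = np.abs(self.q @ q)
        log_k = -0.5 * d2 / self.sigma**2 + self.kappa * (dot - 1.0)
        # Log of the mean kernel value, computed stably (log-mean-exp).
        m = np.max(log_k)
        return m + np.log(np.mean(np.exp(log_k - m)))


def product_log_likelihood(experts, link_poses):
    """Log of a product of experts: the sum of per-link log-densities."""
    return sum(e.log_density(p, q) for e, (p, q) in zip(experts, link_poses))


def select_grasp(experts, candidates):
    """Return (index, score) of the candidate with the highest product likelihood."""
    scores = [product_log_likelihood(experts, c) for c in candidates]
    best = int(np.argmax(scores))
    return best, scores[best]


if __name__ == "__main__":
    identity = [1.0, 0.0, 0.0, 0.0]
    # Two hypothetical experts, e.g. thumb and index fingertip, each learned
    # from a single demonstrated contact pose.
    thumb = ContactExpert([[0.0, 0.0, 0.0]], [identity])
    finger = ContactExpert([[0.05, 0.0, 0.0]], [identity])
    # Candidate 0 is displaced from the demonstration; candidate 1 matches it.
    off = [([0.02, 0.0, 0.0], identity), ([0.05, 0.03, 0.0], identity)]
    match = [([0.0, 0.0, 0.0], identity), ([0.05, 0.0, 0.0], identity)]
    best, score = select_grasp([thumb, finger], [off, match])
    print(best, score)
```

In this toy setup each expert has a single kernel, so the candidate that reproduces the demonstrated link poses wins. Experts built from many demonstrated or surface-derived poses would only change the kernel arrays; the selection itself would remain a sum of log-densities followed by an argmax.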


Principal Investigator (fellow): dr Marek Kopicki, e-mail: marek.kopicki@put.poznan.pl, tel: 616652809

Mentor: prof dr hab. inż. Piotr Skrzypczyński

Investigators: Jakub Chudziński, Piotr Michałek

OUR RECENT ACTIVITY IN BRIEF

A new lab dedicated to advanced grasping and manipulation has been established, equipped with a Franka Emika Panda (FR3) manipulator.

The first milestone is a short paper submitted to PP-RAI 2023.

FORMAL ASPECTS OF THE PROJECT

This research is part of the project No 2021/43/P/ST6/01921 co-funded by the National Science Centre and the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 945339.

This project is affiliated with the Robotics Division of the Institute of Robotics and Machine Intelligence.

The Institute of Robotics and Machine Intelligence (IRIM) is a research unit of Poznan University of Technology focusing on modern, AI-based robotics, computer vision and embedded systems. Over the last five years, IRIM researchers have published more than 50 papers at top robotics, computer vision and machine learning conferences (e.g., IEEE IJCNN, IEEE IROS, IEEE ICRA, RSS, ICARCV) and in journals (e.g., International Journal of Robotics Research, IEEE Robotics and Automation Letters, Robotics and Autonomous Systems, Autonomous Robots, Industrial Robot: An International Journal, Journal of Intelligent and Robotic Systems, Engineering Applications of Artificial Intelligence). They also hold more than 10 patents in the areas of computer vision and robotics. The results presented in these publications have been applied in several R&D projects conducted with business partners. These include cooperation with large companies, e.g. contextual perception of the environment and localization of buses (with Solaris Bus & Coach), inspection of mines and underground tunnels using an autonomous legged robot (with KGHM Cuprum), and manipulation of soft and bendable objects in industrial scenarios (with Volkswagen Poland), as well as numerous projects with SMEs and start-ups from the local ecosystem, e.g. Inwebit, Robtech, Vision4pro, Mandala. Research at IRIM, and particularly in its Robotics Division, focuses on a modern approach to robotics in which autonomy and perception-based decision making are considered the key factors. This research agenda also leverages machine learning as a set of methods that make it possible to overcome the difficulties of the traditional, model-based approach to state estimation, task/motion planning and high-level control in robotics.
Rather than treating robotics as just another application domain for machine learning, the Division regards learning embodied agents as an important driving force for the advancement of machine learning technology. The research topics at the Robotics Division revolve around this idea, although they cover a broad range of applications, including autonomous driving and advanced driver assistance systems (ADAS), indoor navigation and positioning, simultaneous localization and mapping (SLAM), motion planning for legged robots and manipulators, and visual and range-based perception for scene interpretation and semantic understanding. Advanced manipulation emerged naturally from these research interests, leading to several R&D projects, including mobile manipulation with advanced perception and scene understanding (NCBiR LIDER, NCN SONATA) and manipulation of non-rigid objects in industrial scenarios (NCBiR LIDER, H2020 REMODEL).